Asking seven tough Q’s of AI - & exploring our confusions about radical innovation

A few weeks back we held a laboratory on 'the politics of VR' in Brighton (check out Phil Teer's report on the event here). At the event, we discussed the possibilities, limitations and challenges of one of the powerful technologies that will likely play a big part in our future lives. And we tried to figure out how we feel about it.

On each table was a blank paper tablecloth and a handful of markers - an open invitation for the participants to scribble down their thoughts, questions and emotions as the night went along.

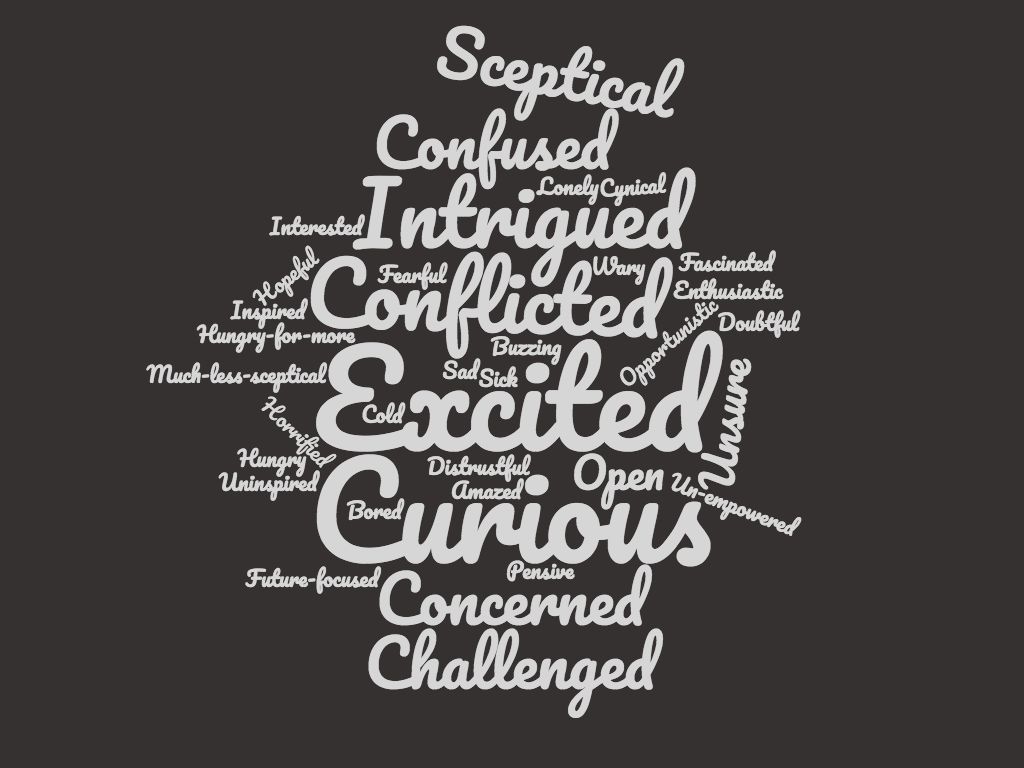

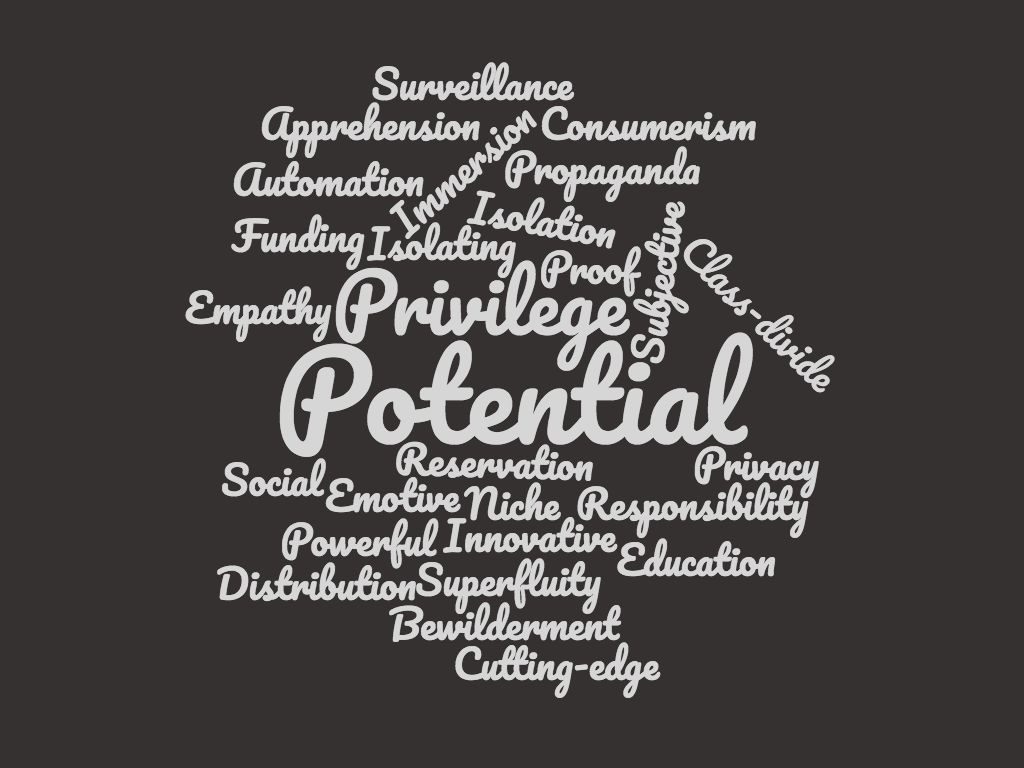

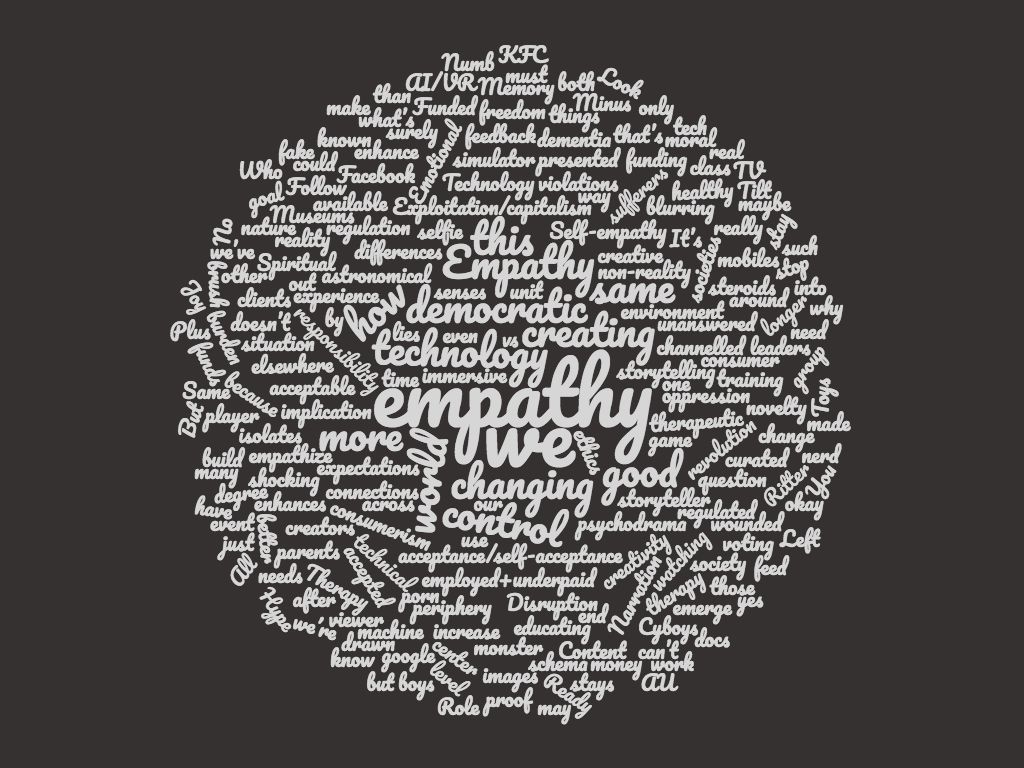

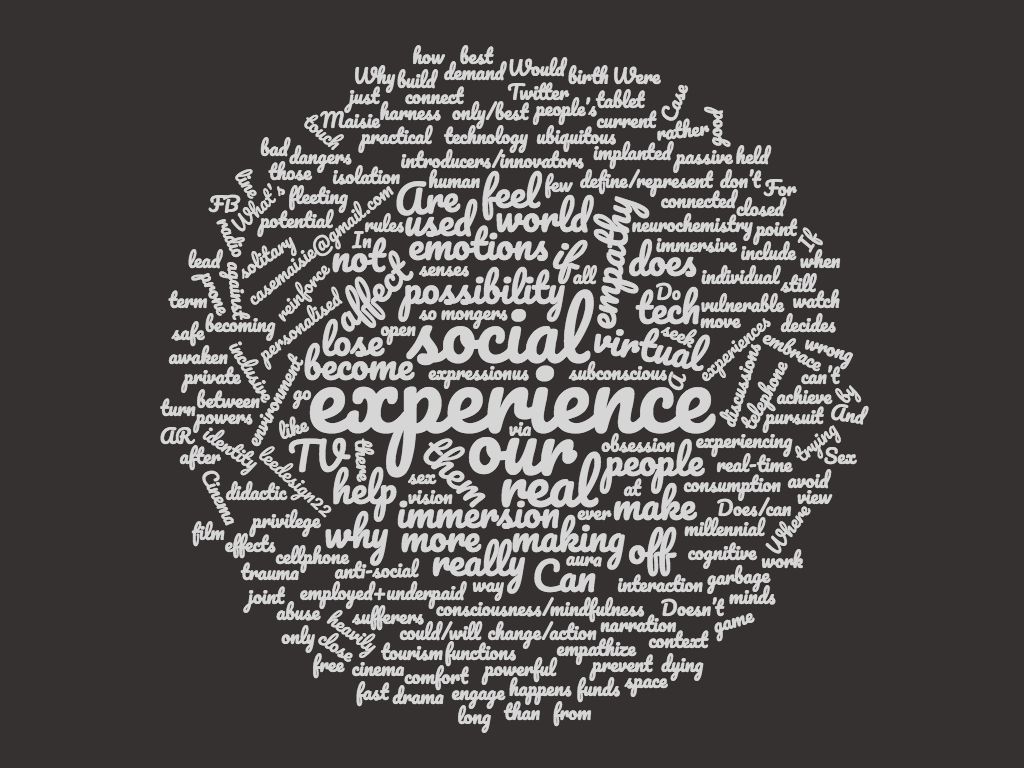

After going through the questions, statements, words and emotions expressed on the tablecloths, it became clear that there were a lot of mixed feelings in play. While many expressed excitement and curiosity, just as many felt sceptical, concerned... and confused.

Word clouds of all tablecloth comments made at the Brighton lab:

Given the many and sometimes conflicting theories, claims and stories on the future of technology out there, this confusion isn’t surprising. The important thing is that we as communities rise to the challenge of these powerful technologies and get together to explore our fears and hopes, and how these technologies can become useful in our lives, rather than just defending ourselves from them. This is something we aim to do at many political laboratories in the future.

In a recent essay, Rodney Brooks - roboticist, author, founder of Rethink Robotics and iRobot - dispels the hysteria that tends to surround the mainstream conversation on the future of artificial intelligence and robotics, and explores the common mistakes that distract us from thinking more productively about the future (adapted nicely, and with permission, in this article at Technology Review):

I recently saw a story in MarketWatch that said robots will take half of today’s jobs in 10 to 20 years. It even had a graphic to prove the numbers.

The claims are ludicrous. (I try to maintain professional language, but sometimes …) For instance, the story appears to say that we will go from one million grounds and maintenance workers in the U.S. to only 50,000 in 10 to 20 years, because robots will take over those jobs. How many robots are currently operational in those jobs? Zero. How many realistic demonstrations have there been of robots working in this arena? Zero. Similar stories apply to all the other categories where it is suggested that we will see the end of more than 90 percent of jobs that currently require physical presence at some particular site.

Mistaken predictions lead to fears of things that are not going to happen, whether it’s the wide-scale destruction of jobs, the Singularity, or the advent of AI that has values different from ours and might try to destroy us. We need to push back on these mistakes. But why are people making them? I see seven common reasons.

He headlines these seven reasons for scepticism about AI:

- Overestimating and underestimating

- Imagining magic

- Performance versus competence

- Suitcase words

- Exponentials

- Hollywood scenarios

- Speed of deployment

Here's an excerpt - head to Technology Review to read the whole thing.

Overestimating and underestimating

We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run.

Roy Amara

GPS started out with one goal, but it was a hard slog to get it working as well as was originally expected. Now it has seeped into so many aspects of our lives that we would not just be lost if it went away; we would be cold, hungry, and quite possibly dead.

We see a similar pattern with other technologies over the last 30 years. A big promise up front, disappointment, and then slowly growing confidence in results that exceed the original expectations. This is true of computation, genome sequencing, solar power, wind power, and even home delivery of groceries.

AI has been overestimated again and again, in the 1960s, in the 1980s, and I believe again now, but its prospects for the long term are also probably being underestimated. The question is: How long is the long term?

Imagining magic

When a distinguished but elderly scientist states that something is possible, he is almost certainly right. When he states that something is impossible, he is very probably wrong.

The only way of discovering the limits of the possible is to venture a little way past them into the impossible.

Any sufficiently advanced technology is indistinguishable from magic.

Arthur C. Clarke

If imagined future technology is far enough away from the technology we have and understand today, then we do not know its limitations. And if it becomes indistinguishable from magic, anything one says about it is no longer falsifiable.

This is a problem I regularly encounter when trying to debate with people about whether we should fear artificial general intelligence, or AGI—the idea that we will build autonomous agents that operate much like beings in the world. I am told that I do not understand how powerful AGI will be. That is not an argument. We have no idea whether it can even exist. I would like it to exist—this has always been my own motivation for working in robotics and AI. But modern-day AGI research is not doing well at all on either being general or supporting an independent entity with an ongoing existence. It mostly seems stuck on the same issues in reasoning and common sense that AI has had problems with for at least 50 years. All the evidence that I see says we have no real idea yet how to build one. Its properties are completely unknown, so rhetorically it quickly becomes magical, powerful without limit.

Nothing in the universe is without limit.

Watch out for arguments about future technology that is magical. Such an argument can never be refuted. It is a faith-based argument, not a scientific argument.

Performance versus competence

People hear that some robot or some AI system has performed some task. They then generalize from that performance to a competence that a person performing the same task could be expected to have. And they apply that generalization to the robot or AI system.

Today’s robots and AI systems are incredibly narrow in what they can do. Human-style generalizations do not apply.

Suitcase words

Suitcase words mislead people about how well machines are doing at tasks that people can do. That is partly because AI researchers—and, worse, their institutional press offices—are eager to claim progress in an instance of a suitcase concept. The important phrase here is “an instance.” That detail soon gets lost. Headlines trumpet the suitcase word, and warp the general understanding of where AI is and how close it is to accomplishing more.

Exponentials

Many people are suffering from a severe case of “exponentialism.” When people are suffering from exponentialism, they may think that the exponentials they use to justify an argument are going to continue apace.

Take the example of the iPod, which grew from 10 gigabytes of memory in 2002 to 160 in 2007. Extrapolating that growth through to today, we would expect a $400 iPod to have 160,000 gigabytes of memory. But the top iPhone of today (which costs much more than $400) has only 256 gigabytes of memory, less than double the capacity of the 2007 iPod. This particular exponential collapsed very suddenly once the amount of memory got to the point where it was big enough to hold any reasonable person’s music library and apps, photos, and videos. Exponentials can collapse when a physical limit is hit, or when there is no more economic rationale to continue them.
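To make the iPod arithmetic concrete, here is a rough sketch of the naive extrapolation. It rests on two assumptions that are not stated in the excerpt: that capacity doubles once a year, and that "today" means 2017. Under those assumptions the projection lands close to the 160,000 gigabyte figure quoted above. The function name and its parameters are purely illustrative.

```python
# Naive extrapolation of iPod storage under uninterrupted exponential growth.
# Assumptions (not from the excerpt): a one-year doubling period, "today" = 2017.

def extrapolate_capacity(base_gb, base_year, target_year, doubling_years=1.0):
    """Project capacity forward, assuming it keeps doubling every `doubling_years`."""
    doublings = (target_year - base_year) / doubling_years
    return base_gb * 2 ** doublings

projected_gb = extrapolate_capacity(160, 2007, 2017)
actual_gb = 256  # capacity of the top iPhone, as quoted in the text

print(f"Projected: {projected_gb:,.0f} GB")
print(f"Actual:    {actual_gb} GB")
print(f"Overshoot: {projected_gb / actual_gb:.0f}x")
```

The size of the gap between the projected and actual figures is the point: a trend that held for five years says little about the next ten once the reason for the growth has gone away.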

Similarly, we have seen a sudden increase in performance of AI systems thanks to the success of deep learning. Many people seem to think that means we will continue to see AI performance increase by equal multiples on a regular basis. But the deep-learning success was 30 years in the making, and it was an isolated event.

Hollywood scenarios

The plot for many Hollywood science fiction movies is that the world is just as it is today, except for one new twist. In Bicentennial Man, Richard Martin, played by Sam Neill, sits down to breakfast and is waited upon by a walking, talking humanoid robot, played by Robin Williams. Richard picks up a newspaper to read over breakfast. A newspaper! Printed on paper. Not a tablet computer, not a podcast coming from an Amazon Echo–like device, not a direct neural connection to the Internet.

It turns out that many AI researchers and AI pundits, especially those pessimists who indulge in predictions about AI getting out of control and killing people, are similarly imagination-challenged. They ignore the fact that if we are able to eventually build such smart devices, the world will have changed significantly by then. We will not suddenly be surprised by the existence of such super-intelligences. They will evolve technologically over time, and our world will come to be populated by many other intelligences, and we will have lots of experience already. Long before there are evil super-intelligences that want to get rid of us, there will be somewhat less intelligent, less belligerent machines. Before that, there will be really grumpy machines. Before that, quite annoying machines. And before them, arrogant, unpleasant machines. We will change our world along the way, adjusting both the environment for new technologies and the new technologies themselves. I am not saying there may not be challenges. I am saying that they will not be sudden and unexpected, as many people think.

Speed of deployment

A lot of AI researchers and pundits imagine that the world is already digital, and that simply introducing new AI systems will immediately trickle down to operational changes in the field, in the supply chain, on the factory floor, in the design of products.

Nothing could be further from the truth. Almost all innovations in robotics and AI take far, far longer to be really widely deployed than people in the field and outside the field imagine.